Ways to test content with users

During the past few years, I’ve compiled a list of ways to test content with users. Whenever I need to test content, I start by skimming the list to jog my memory about which methods might be the most appropriate.

I’d like to share the list here. Maybe it will be useful to you, too.

I’ve loosely organized the approaches by research question and by scope—from testing content at the “micro” level (words, phrases, sentences, and images) to the “macro” level (pages, navigation, and task flows). However, this is not an exhaustive list, and many of these approaches could answer other questions or work at multiple levels.

I didn’t invent these techniques. They all appear elsewhere in books and articles. I’ve included links to more information about each approach.

Comprehension: Will users understand what individual words, phrases, sentences, or images mean?

Cloze test

Example of a Cloze test, from “Cloze Test for Reading Comprehension.”

Overview: To set up this test, start with a few paragraphs of text and replace every fifth or sixth word with a blank line. Then ask participants to fill in the blanks. If their guesses are right more often than they’re wrong, it’s a sign that the content is relatively easy for them to understand.
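If you want to prepare a Cloze test from a draft without blanking words by hand, the setup above can be sketched in a few lines of Python. This is only an illustration: the function names `make_cloze` and `score_cloze` are made up for this example, and the scoring assumes exact matches (ignoring case), whereas a human scorer might also accept synonyms or misspellings.

```python
import re


def make_cloze(text, every=5, blank="_____"):
    """Replace every `every`-th word with a blank.

    Returns the gapped text and the list of removed words (the answer key).
    """
    words = text.split()
    answers = []
    for i in range(every - 1, len(words), every):
        # Split the token into leading punctuation, the word itself,
        # and trailing punctuation, so punctuation survives the blanking.
        match = re.match(r"(\W*)(\w[\w'’-]*)(.*)", words[i])
        if match is None:
            continue  # token has no word characters (e.g. a lone dash)
        lead, core, trail = match.groups()
        answers.append(core)
        words[i] = f"{lead}{blank}{trail}"
    return " ".join(words), answers


def score_cloze(answers, guesses):
    """Return the share of blanks a participant filled in correctly.

    Assumption: a guess counts only if it matches the removed word exactly,
    ignoring case and surrounding spaces.
    """
    if not answers:
        return 0.0
    correct = sum(
        answer.lower() == guess.strip().lower()
        for answer, guess in zip(answers, guesses)
    )
    return correct / len(answers)


sample = "The quick brown fox jumps over the lazy dog near the river bank today."
gapped, key = make_cloze(sample, every=5)
print(gapped)
print(score_cloze(key, ["jumps", "by"]))
```

A score above 0.5 corresponds to the "right more often than wrong" rule of thumb described above.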

How to do a Cloze test:

“Cloze Test for Reading Comprehension” (Nielsen Norman Group)

“Use a Cloze Test” from “Testing Content” (Angela Colter)

Microtesting content (also known as “user questions test” or “comprehension survey”)

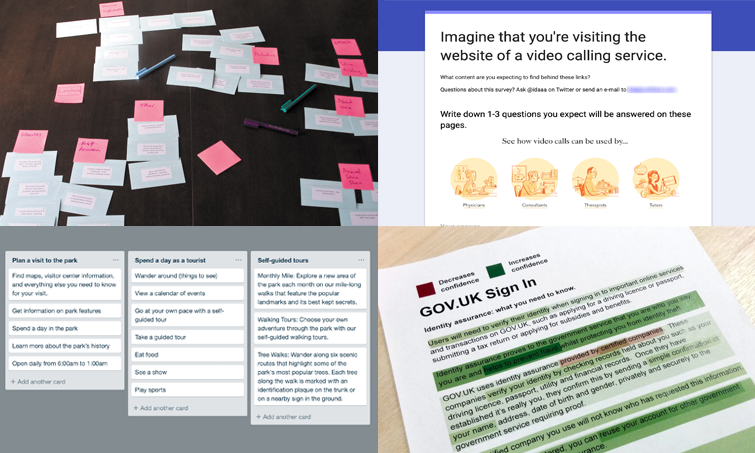

Microtesting content with a form, from an example by Ida Aalen.

Overview: To microtest content, copy and paste a page title, description, illustration, or other piece of content into a survey form. In the form, ask participants to look at the content and answer a few questions about it. This can help you learn whether users will interpret content the way that you intend and whether the content is relevant to them.

How to microtest content:

Slides 3 through 11 in “Easy and Affordable User Testing” plus this example of a form (Ida Aalen)

“Comprehension Survey” from “Testing for UX Writers: Know When Your Words Are Working” (Annie Adams)

Confidence: What content will make users feel confident or uncertain?

Highlighting test

Example of a highlighting test, from “A Simple Technique for Evaluating Content.”

Overview: Print a page of content or paste the content into a Google Doc. Share the printout or online doc with a participant. Ask the participant to use green to highlight content that makes them feel confident and orange to highlight content that makes them feel uncertain. After the activity, ask follow-up questions to learn more about what aspects of the content caused uncertainty.

How to do a highlighting test:

“A Simple Technique for Evaluating Content” (Pete Gale)

“Testing Content with Users” (Mygov.scot)

Sequence: Is the content in the right sequence for users?

Kanban testing

Example of a kanban test, from “User Test Content Before You Start Design.”

Overview: In a kanban tool like Trello, create a card for each section of a page. Give participants a goal or task. Then ask them to think out loud as they reorder the cards so the most important cards appear first. When testing a task flow, arrange the cards in columns and allow participants to move cards between columns.

How to do a kanban test:

“User Test Content Before You Start Design” (Amy Grace Wells)

First impression: Will users understand the content at first glance?

Five-second test or impression test

Overview: Show an interface to participants for 5 to 15 seconds. Then ask some simple questions to learn whether the page’s purpose and main elements were clear.

How to do a five-second test or impression test:

“Five Second Tests” (Usability Hub)

“Impression Testing” (TryMyUI)

First-click tests

Example of a first-click test, from “First-Click Testing 101.”

Overview: Give participants a task. Then show them an interface and ask where they would click to start. Observe where participants click and how long it takes them to make that first click. Ask participants to rate how confident they feel that their click would lead to the information they need.
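If you record first-click results in a spreadsheet or script, the three observations above (where people clicked, how long it took, and how confident they felt) can be summarized with a short Python sketch. The field names (`target`, `seconds`, `confidence`) and the function name `summarize_first_clicks` are hypothetical, chosen only for this example; a testing tool may export its data differently.

```python
from collections import Counter
from statistics import mean, median


def summarize_first_clicks(results, correct_target):
    """Summarize first-click results for one task.

    `results` is a list of dicts, one per participant, with the keys
    "target" (what they clicked), "seconds" (time to first click), and
    "confidence" (self-rated, e.g. on a 1 to 5 scale).
    """
    clicks = Counter(r["target"] for r in results)
    return {
        # Share of participants whose first click hit the intended target.
        "success_rate": clicks[correct_target] / len(results),
        # Median is less sensitive than the mean to one very slow participant.
        "median_seconds": median(r["seconds"] for r in results),
        "mean_confidence": mean(r["confidence"] for r in results),
        # Where everyone clicked, most common first.
        "clicks_by_target": clicks.most_common(),
    }


results = [
    {"target": "nav-billing", "seconds": 3.0, "confidence": 5},
    {"target": "search", "seconds": 8.0, "confidence": 2},
    {"target": "nav-billing", "seconds": 4.0, "confidence": 4},
]
print(summarize_first_clicks(results, correct_target="nav-billing"))
```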

How to do a first-click test:

“First Click Testing” (Usability.gov)

“First-Click Testing 101” (Optimal Workshop)

“Getting the First Click Right” (Jeff Sauro)

Comparison: Which version works better for users?

Preference testing

Overview: Give participants two versions of a piece of content. Ask them to read the content. Then ask which version they prefer. Ask follow-up questions about why they preferred the version they chose.

How to do a preference test:

“Preference Testing” in “Looking at the Different Ways to Test Content” (Emileigh Barnes and Christine Cawthorne)

“Preference Testing: What to Do before You Run A/B Tests” (Jennifer Derome)

“Conducting Preference Testing” from the Coursera course UX Research at Scale: Surveys, Analytics, Online Testing (Lija Hogan)

A/B testing or multivariate testing

Overview: Create two versions of a piece of content (for an A/B test), or vary several elements at once and test the combinations (for a multivariate test). Run an experiment to see which version performs better with users.
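One common way to judge which version "performs better" is to compare conversion rates with a two-proportion z-test. The Python sketch below assumes that performance means a yes/no outcome per visitor (for example, clicked or did not click) and that both samples are reasonably large; the function name and the numbers are made up for illustration. Dedicated A/B testing tools may use other statistical methods.

```python
from math import erf, sqrt


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Compare the conversion rates of versions A and B.

    Returns (z, p_value) for a two-sided test of "the rates are equal".
    """
    rate_a = success_a / total_a
    rate_b = success_b / total_b
    # Pooled rate under the assumption that A and B perform the same.
    pooled = (success_a + success_b) / (total_a + total_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_error == 0:
        raise ValueError("No variation in outcomes; the test is undefined.")
    z = (rate_b - rate_a) / std_error
    # Standard normal CDF via the error function, then a two-sided p-value.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value


# Hypothetical data: 100 of 1,000 visitors converted on A, 150 of 1,000 on B.
z, p = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference between versions is unlikely to be chance alone, but it says nothing about why users responded differently, which is where the qualitative methods in this list come in.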

How to do an A/B test or multivariate test:

“5 Steps to Quick-Start A/B Testing” (Jennifer Leigh Brown)

“Multivariate Testing” (18F)

“Conducting an A/B Study” from the Coursera course UX Research at Scale: Surveys, Analytics, Online Testing (Lija Hogan)

Experiment! Website Conversion Rate Optimization with A/B and Multivariate Testing (Colin McFarland)

Navigation: Will users be able to find things easily?

Card sorts

Example of an online card sort, from Card Sorting: Designing Usable Categories, CC BY 2.0.

Overview: Ask participants to sort content into groups. Then ask participants to explain why they sorted the content the way they did. Card sorts can be “open” (when participants create their own categories) or “closed” (when participants sort content into categories that you’ve already chosen).
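When you have results from several participants, a simple first step in analysis is to count how often each pair of cards ended up in the same group. The Python sketch below shows one way to do that; the data layout (one dict of groups per participant) and the function name `co_occurrence` are assumptions for this example, and card-sorting tools typically offer their own analysis views.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Count how many participants put each pair of cards in the same group.

    `sorts` is a list with one entry per participant; each entry maps a
    group name to the list of cards placed in that group.
    """
    pairs = Counter()
    for sort in sorts:
        for cards in sort.values():
            # Sort the cards so ("A", "B") and ("B", "A") count as one pair.
            for pair in combinations(sorted(cards), 2):
                pairs[pair] += 1
    return pairs


# Hypothetical open card sort with two participants.
sorts = [
    {"Money": ["Invoices", "Refunds"], "Account": ["Password"]},
    {"Billing": ["Invoices", "Refunds", "Password"]},
]
for pair, count in co_occurrence(sorts).most_common():
    print(pair, count)
```

Pairs that most participants group together are candidates for living under the same category; the group names participants chose in an open sort are still worth reading by hand.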

How to do a card sort:

“Card Sorting” (18F)

“Card Sorting” (Usability.gov)

Card Sorting: Designing Usable Categories (Donna Spencer)

Tree testing

Example of a tree test, from “Tree Testing: Fast, Iterative Evaluations of Menu Labels and Categories.”

Overview: Create a hierarchical navigation menu to test. Draft a list of tasks that users would need to complete when interacting with the final product. Using a tree-testing platform or a paper prototype, ask participants to show you how they would “navigate” through the menu to accomplish each task.

How to do a tree test:

“Tree Testing: Fast, Iterative Evaluations of Menu Labels and Categories” (Kathryn Whitenton)

Tree Testing for Websites (Dave O’Brien)

Tasks: Will users be able to complete tasks easily?

Usability testing

An in-person, moderated usability test. (Photo from “A Visit from Jakob Nielsen.”)

Overview: Create a list of tasks that users need to complete. Ask participants to try to complete the tasks one at a time. Observe how they try to complete the tasks and what problems they encounter.

How to do a usability test:

“Usability Testing” (18F)

“Usability Testing” and “Running a Usability Test” (Usability.gov)

Rocket Surgery Made Easy: The Do-It-Yourself Guide to Finding and Fixing Usability Problems (Steve Krug)

User-feedback form

Example of a user-feedback form, from “Microsoft Office Help and Training.”

Overview: On every screen, give users a way to report whether the content is useful. Ask a follow-up question to learn more about what users are trying to do.

How to get started with a user-feedback form:

See Feedleback for a simple form (created by Audun Rundberg). Shout-out to Ida Aalen for recommending this one.

Eye tracking

Example of an eye-tracking study, from Eye Tracking the User Experience: A Practical Guide to Research, CC BY 2.0.

Overview: Ask a participant to look at a screen while using an eye-tracking device. The device will attempt to create a map of the participant’s eye movements across the screen.

How to do an eye-tracking study:

“Eye Tracking” (Usability.gov)

How to Conduct Eyetracking Studies (Kara Pernice and Jakob Nielsen)

Eye Tracking the User Experience: A Practical Guide to Research (Aga Bojko)
